I was very impressed with Sissel's Lean Six Sigma knowledge. She makes it easy to identify improvements and create results.

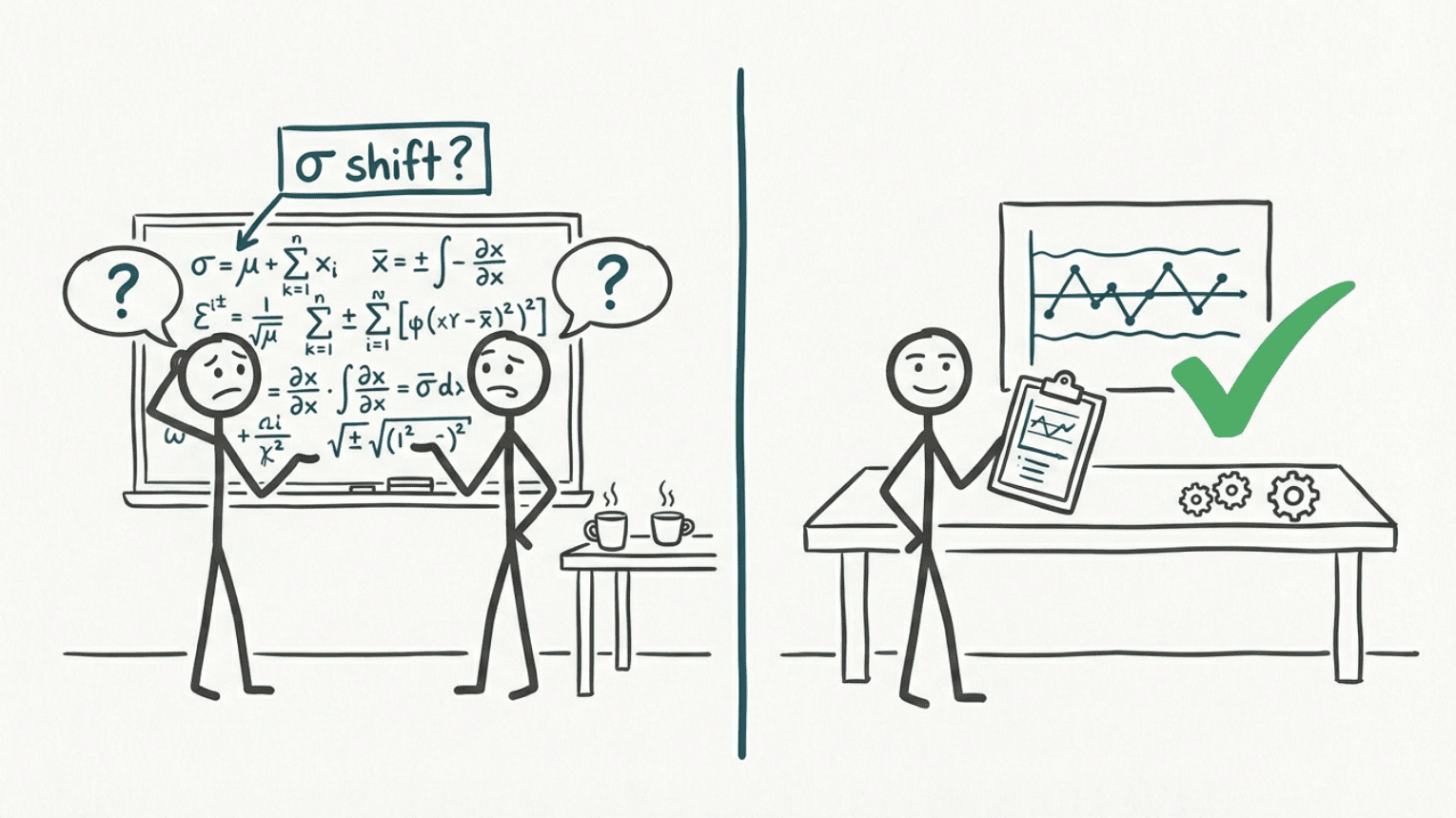

Even within Six Sigma, a method built on facts, experts disagree on fundamental numbers. While they debate, we use the time to actually improve processes.

Ask two Six Sigma experts how many defects a "6-sigma process" has, and you get two answers:

"3.4 per million," says one, pointing to the table that appears in every Six Sigma course.

"2 per billion," says the other, and proves it mathematically.

Both are right. Both can document it with statistics.

I discovered this when I checked the numbers myself. The table used in Six Sigma training lists 6.7% defects for a 3-sigma process. But 3 sigma corresponds to the control limits in SPC, where the probability of a value falling outside the limits is only about 0.3% (assuming a normal distribution).

The numbers didn't match.

After I shared my findings in a Lean Six Sigma group on LinkedIn, I got the answer: the famous 1.5-sigma process shift, a correction for long-term variation that some practitioners use and others don't.
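To check both answers yourself, here is a minimal Python sketch. It assumes normally distributed output, specification limits placed at plus/minus the sigma level from the target and, for the shifted case, the mean drifting 1.5 sigma toward one of the limits. The function names are our own, for illustration only.

```python
from math import erfc, sqrt


def upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal variable Z."""
    return 0.5 * erfc(z / sqrt(2))


def defects_per_million(sigma_level: float, shift: float = 0.0) -> float:
    """Expected defects per million for a normally distributed process.

    Assumption: spec limits sit at +/- sigma_level standard deviations from the
    target, and the mean has drifted `shift` standard deviations toward one limit.
    """
    # Tail beyond the nearer limit plus tail beyond the farther limit
    p = upper_tail(sigma_level - shift) + upper_tail(sigma_level + shift)
    return p * 1e6


for level in (3, 4, 5, 6):
    no_shift = defects_per_million(level)
    with_shift = defects_per_million(level, shift=1.5)
    print(f"{level} sigma: {no_shift:12.4f} per million without shift, "
          f"{with_shift:10.1f} per million with 1.5-sigma shift")
```

Under these assumptions, 3 sigma gives about 2,700 defects per million (0.27%) without the shift and about 66,800 (6.7%) with it. At 6 sigma you get about 0.002 per million (2 per billion) without the shift and about 3.4 per million with it. Same process, same statistics, different convention.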

That explains why the experts disagree.

But here's the most important question: Does this change ANY PRACTICAL DECISIONS in your production?

The answer is probably: No.

Situation: Even Six Sigma experts disagree on fundamental statistical numbers and which correction factor to use.

Insight: The discussion about theoretical precision doesn't help production. While experts debate how many errors there are in a 6-sigma process, the process still produces scrap.

Signs to look for: Meetings where you discuss details without making decisions. Teams waiting for perfect understanding before starting. Theory that stops action.

Next step: Start with good enough understanding. Act while others discuss. Improve the process with the tools you have.

What this is about: The difference between academic precision and practical utility. The difference between understanding theory perfectly and creating results in reality.

Why it happens: We think we must understand EVERYTHING before we can start. We discuss theory instead of testing in practice. We search for the perfect answer, while the good answer waits to be used.

How you recognize it:

• The team debates whether to use 25 or 30 data points before starting SPC, while the process produces scrap

• The quality manager insists everyone must understand statistical theory before using control charts, so no one uses them

• Management wants the "right" solution before testing anything, so nothing gets tested

• Operators ask "how many measurements do we need?" instead of starting with the data they have

Perfect understanding never comes. Good enough understanding delivers results now.

The question is not: "Is it 3.4 defects per million or 2 defects per billion in a 6-sigma process?"

The question is: "How do WE reduce errors in OUR process, now?"

It's not about perfect statistical understanding, academic precision in calculations, or winning debates about theory.

It's about starting with the data you have, separating signal from noise well enough to act correctly, and improving the process while others discuss.

Maybe you're not discussing Six Sigma statistics right now. But I bet you recognize the dynamic:

• You spend hours in meetings discussing details that don't change the decision

• The consultant spends three hours explaining the normal distribution, while operators just want to know "should I adjust or not?"

• The team waits for someone to have the perfect answer before you start testing

• You're more concerned about whether the method is "correct" than whether it produces results

• Theoretical understanding is valued higher than practical improvement

While you discuss, the process continues to produce scrap. While you wait for perfect understanding, your competitor improves with good enough knowledge.

Step 1: Start simple

You don't need perfect understanding to start. Do you need to know how many defects per million your process produces to determine whether YOUR process is stable? No. A simple control chart tells you what you need.

Step 2: Use "good enough" SPC

A simple control chart with ±3 sigma limits tells you: Is the process predictable? Should we adjust now, or wait? That's 90 percent of the value, with 10 percent of the complexity.
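A simple control chart does not take much code. The sketch below computes ±3 sigma limits for an individuals (X) chart, one common choice when you take one measurement at a time. It assumes sigma is estimated from the average moving range divided by 1.128 (the standard d2 constant for ranges of two consecutive points). The function names and the measurements are hypothetical.

```python
from statistics import mean


def individuals_limits(values: list[float]) -> tuple[float, float, float]:
    """Return (lower limit, centre line, upper limit) for an individuals (X) chart."""
    if len(values) < 2:
        raise ValueError("need at least two measurements")
    centre = mean(values)
    # Moving range: absolute difference between each pair of consecutive measurements
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    # d2 = 1.128 converts the average moving range (pairs of points) into a sigma estimate
    sigma_hat = mean(moving_ranges) / 1.128
    return centre - 3 * sigma_hat, centre, centre + 3 * sigma_hat


def points_outside(values: list[float], lower: float, upper: float) -> list[int]:
    """Indexes of measurements that fall outside the control limits."""
    return [i for i, x in enumerate(values) if x < lower or x > upper]


# Hypothetical measurements, e.g. a diameter in mm
measurements = [10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 10.0, 9.9, 10.3, 10.0]
lcl, cl, ucl = individuals_limits(measurements)
print(f"LCL={lcl:.3f}  CL={cl:.3f}  UCL={ucl:.3f}")
# A new reading of 11.5 is checked against the limits from the first ten points
print("Outside limits:", points_outside(measurements + [11.5], lcl, ucl))
```

A point outside the limits is a signal worth investigating. Points inside the limits are treated as normal variation, and the process is left alone.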

Step 3: Improve while others discuss

Perfect theory won't reduce your scrap. Action based on good enough understanding will. Start with what you have. Adjust based on experience. Learn along the way.

Don't we need to understand statistics to use SPC?

You need enough understanding to separate signal from noise and act correctly. You do NOT need to prove formulas or win debates with statisticians. Good enough beats perfect.
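For the operator's question, "should I adjust or not?", the decision can be reduced to a couple of rules. This minimal sketch assumes the centre line and ±3 sigma limits are already known, and uses two common detection rules: the newest point outside the limits, or 8 points in a row on the same side of the centre line (one of the Western Electric rules; Nelson's version uses 9). The function name and the default run length are illustrative choices, not a standard.

```python
def should_adjust(values: list[float], centre: float, lower: float, upper: float,
                  run_length: int = 8) -> bool:
    """Return True if the latest data show a signal worth reacting to.

    Signal 1: the newest point lies outside the control limits.
    Signal 2: the last `run_length` points all lie on the same side of the centre line.
    Anything else is treated as noise: leave the process alone.
    """
    if not values:
        return False
    latest = values[-1]
    if latest < lower or latest > upper:
        return True
    recent = values[-run_length:]
    if len(recent) == run_length:
        if all(x > centre for x in recent) or all(x < centre for x in recent):
            return True
    return False


# Hypothetical readings with centre 10.0 and limits 9.0 to 11.0
print(should_adjust([10.2, 9.8, 10.1, 9.9], centre=10.0, lower=9.0, upper=11.0))
```

The point of the rules is to stop reacting to every small movement. Adjusting on noise adds variation; adjusting on a signal removes it.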

How much data do we need before we can start?

Start with what you have. 20 data points are better than none. Precision improves as more data comes in over time. Waiting for perfect data gives zero improvement.

What if we make mistakes in the beginning?

Then you learn and adjust. That's better than waiting for perfect understanding while the process produces scrap. Mistakes you learn from are better than perfection that never starts.

Isn't Six Sigma based on statistical precision?

Six Sigma is based on reducing variation and improving processes. Statistics is the tool, not the goal. The tool doesn't need to be perfect to be useful.

Why is it important to question established truths?

We can have different perceptions of reality. That's why it's important to ask clarifying questions to get a shared picture of reality.

This story is from our weekly newsletter, where we share experiences. Short stories for those who want to solve problems at the root and achieve measurable, lasting value creation.


If you want to learn more about the topics in this post:

• Learn SPC as it's actually used in practice, not academic theory

• Before you ask "why", ask "how often"

• Before you chase process errors, check the measurement system

Lean Tech AS | Kristoffer Robins vei 13

0047 481 23 070

Oslo, Norway

L – Look for solutions

E – Enthusiastic

A – Analytical

N – Never give up